
Calibrating Sensors

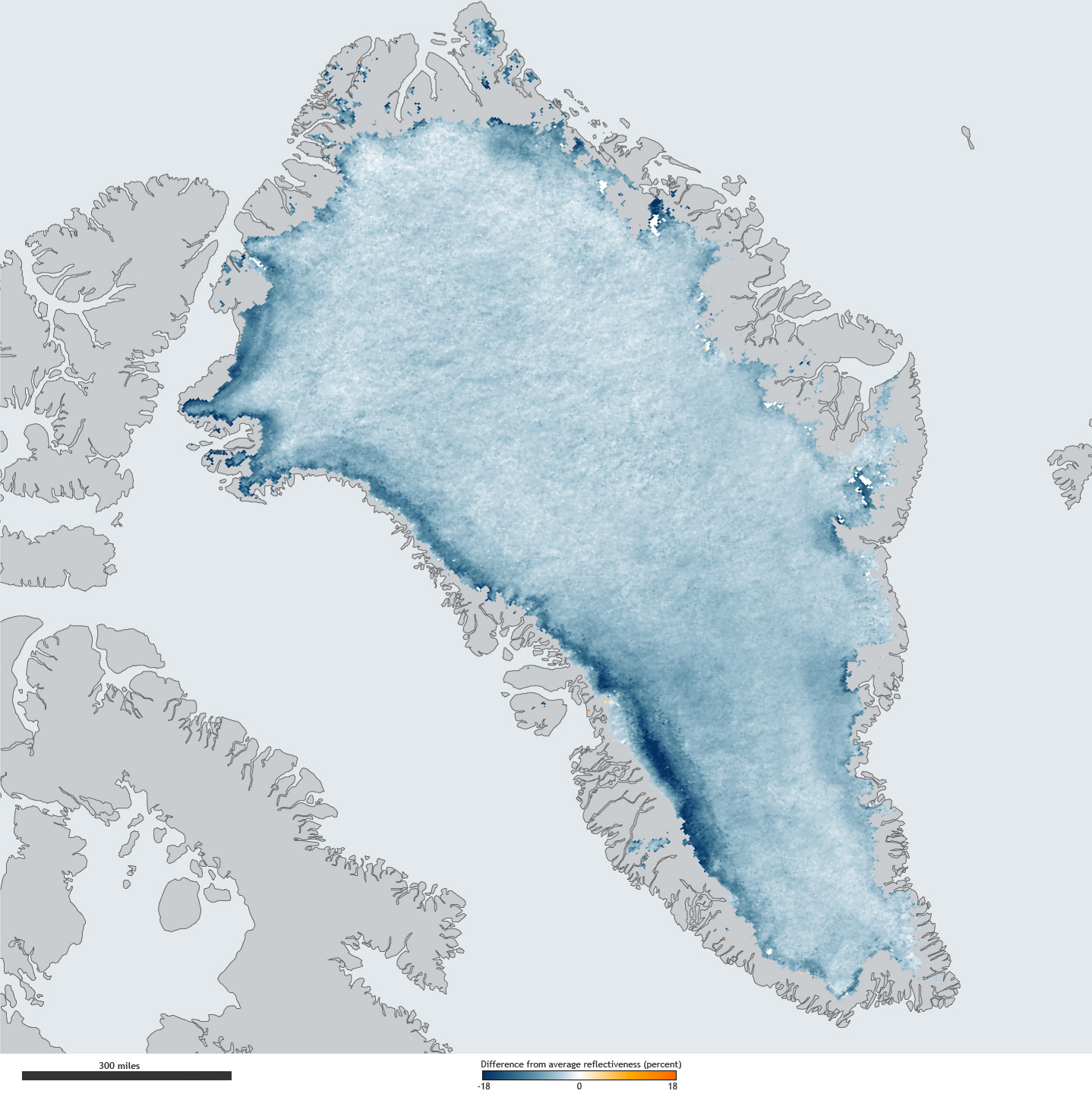

When I was in university, I had the privilege of working for the Canadian government doing research in preparation for the first RADARSAT satellite. When I was there in 1991, RADARSAT was still four years in the future, but the Europeans launched ERS-1 that summer and it carried the first spaceborne Synthetic Aperture Radar (SAR) system. Because Canada had a reliable airborne SAR system, we helped the Europeans calibrate their new satellite. We did that by having the satellite and the plane pass over the same stretch of eastern Lake Superior shoreline at the same time while people on the ground took measurements of the conditions that would affect radar return, such as air and water temperature, wave heights, wind speed, and so forth. The ground measurements and airborne radar images could be compared and then the ERS-1 images could be calibrated against both of them. Once calibrated to real-world data, the satellite SAR could image any other areas of the Earth's surface and the radar image could be reliably interpreted to describe the surface conditions.

The Convair 580 C-X SAR aircraft that helped calibrate the ERS-1 satellite in 1991.

The lesson I learned from those months doing radar research is that even the most complex sensors are only useful if they are calibrated against a real-world source.
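To make the idea of calibrating against a reference concrete, here is a minimal sketch in Python. It assumes the relationship between raw sensor readings and reference measurements is roughly linear, and the function names `fit_linear_calibration` and `calibrate` are hypothetical, chosen only for illustration. It is not the procedure that was used for ERS-1, which was far more involved.

```python
def fit_linear_calibration(raw, reference):
    """Least-squares fit of: reference ~= gain * raw + offset.

    raw       -- readings from the uncalibrated sensor
    reference -- trusted measurements taken at the same time and place
    (Assumption: a straight line is an adequate model for this sensor.)
    """
    n = len(raw)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired measurements")

    mean_raw = sum(raw) / n
    mean_ref = sum(reference) / n

    # Sum of squared deviations of the raw readings.
    sxx = sum((x - mean_raw) ** 2 for x in raw)
    if sxx == 0:
        raise ValueError("raw readings must not all be identical")

    # Sum of cross-deviations between raw and reference readings.
    sxy = sum((x - mean_raw) * (y - mean_ref) for x, y in zip(raw, reference))

    gain = sxy / sxx
    offset = mean_ref - gain * mean_raw
    return gain, offset


def calibrate(value, gain, offset):
    """Convert a raw reading into calibrated units using the fitted line."""
    return gain * value + offset


# Illustrative numbers only, not real radar data.
gain, offset = fit_linear_calibration([1, 2, 3, 4], [3, 5, 7, 9])
corrected = calibrate(10, gain, offset)
```

The point of the sketch is that the fit is only possible because a second, trusted set of measurements exists. Without the reference values there is nothing to fit against.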

Implications for Business Intelligence

As we move into the age of the Internet of Things (IoT), this problem will only become more prevalent. If we base any important decisions on a single measurement source, we run the risk of getting fooled by a faulty sensor. Of course, it's not just technical gizmos that can fail. Any single source of data can be faulty, including someone's spreadsheet.

When I worked in the hospital sector in the late 1990s, the government funding agency sent out a spreadsheet showing how the complexity of cardiac surgery cases had mysteriously dropped during the first six months of that year across all cardiac hospitals in the province. As a result, they were clawing back over a million dollars in funding based on the argument that the hospitals were taking simpler cases and were redirecting the funds elsewhere. Our hospital CEO was concerned, as it is no simple exercise to find money halfway through a budget year.

A colleague of mine was assigned to discover whether this complexity drop was real, so the first thing he did was talk to the Chief of Cardiac Surgery. Were cardiac surgery cases getting simpler? On the contrary, he answered, they were getting noticeably more complex. It was the same story when he spoke to surgeons at other hospitals. My colleague then set out to find the cause of the discrepancy, which turned out to be a formula error buried deep in the spreadsheet. He corrected the spreadsheet, sent it back to the government, and a few days later they sheepishly sent out the corrected spreadsheet showing a slight increase in cardiac surgery complexity across the province. The funding clawback was quietly cancelled. It was sloppy spreadsheet work on the government's part for sure, but the approach to solving the problem began by getting a second measurement source, in this case by having a conversation with a surgeon.

It reminds me of Segal's Law, which states:

A man with a watch always knows what time it is.

A man with two watches is never sure.

Every watch has a measurement error of some degree, but you are only confronted with that error when comparing to a second watch. When looking at one watch, we can easily forget about its inherent inaccuracies. NASA was looking at one watch for a decade and it was sure it had the correct time. Even the experts can make simple mistakes.
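The "second watch" idea can be sketched as a simple agreement check between two independent sources for the same metric. This is a minimal Python illustration under my own assumptions: the function name `sources_agree` and the 5% default tolerance are hypothetical, and the right tolerance depends entirely on the metric in question.

```python
def sources_agree(primary, secondary, rel_tolerance=0.05):
    """Return True when two independent measurements of the same
    quantity agree to within rel_tolerance (a fraction, e.g. 0.05 = 5%).

    The difference is measured relative to the larger of the two
    magnitudes, so the check is symmetric in its arguments.
    """
    scale = max(abs(primary), abs(secondary))
    if scale == 0:
        # Both readings are exactly zero, so they trivially agree.
        return True
    return abs(primary - secondary) / scale <= rel_tolerance


# Illustrative numbers only: a metric from a report versus the same
# metric recomputed from a second, independent source.
if not sources_agree(100.0, 120.0):
    print("Sources disagree: investigate before acting on the metric.")
```

A failed check does not say which source is wrong, only that at least one of them is. That is the uncomfortable but useful position of the man with two watches.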

When it comes to any important business decision that you are basing on your BI metrics, always make sure you have a second watch.

Source: David Boettcher. Notice how these watches differ?