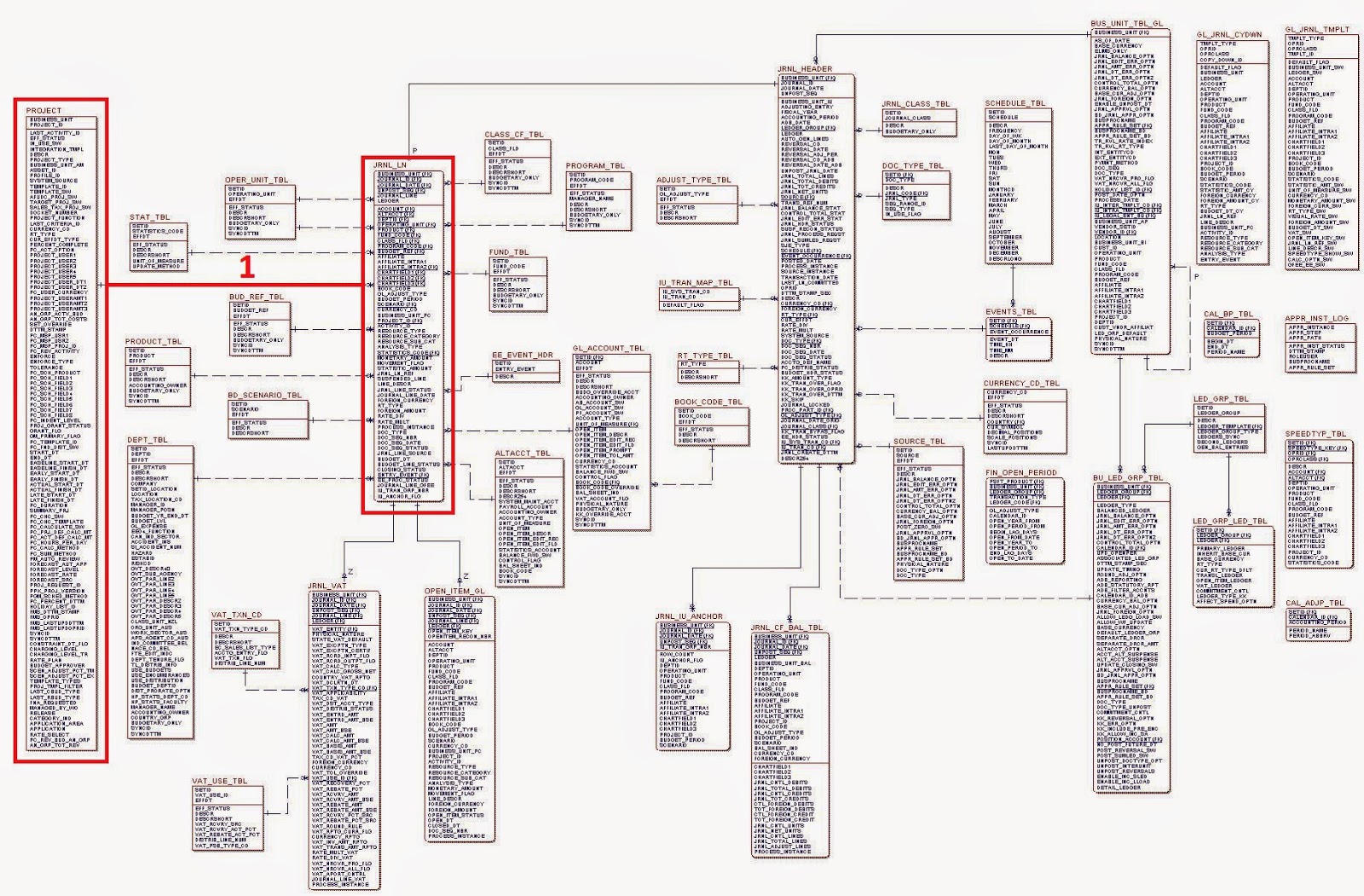

Snowflake Schema

The Star Schema is a de-normalized data structure designed to optimize aggregation query response times. It contains a fact table surrounded by a number of entity tables.A common expansion of the Star Schema is the Snowflake. A Snowflake Schema is simply a Star Schema where one or more of the entities have been partially normalized. This means some of the repeated details for an entity table have been hived off into a separate details table to reduce data repetition in the main entity table. An example of a Snowflake Schema is below.

Note that the original Star Schema tables are still there, but new Entity Details tables have been added around the edges, making it roughly resemble a snowflake.

Snowflake Schemas are rarely symmetric. Some entities may have multiple detail tables (such as Entity 1 above with 3) while other entities may have none (Entity 2).

What type of data is stored in the Entity Detail tables? Anything that is repeated frequently in the main Entity tables. Hierarchy levels or long textual description fields are common candidates for snowflaking.

For example, an Entity called Cost Center may have a hierarchy starting at the top with Company, then going down to Business Unit, Department and Cost Center. The facts are stored at the cost center level, so the Company, Business Unit and Department values will be repeated frequently in the entity table. Therefore, those details could be put in a separate entity details table called Cost Center Hierarchy with the cost center key remaining in the main entity table.

Avoid Snowflaking

Now that we've reviewed what the Snowflake Schema is, this is why you should avoid using it: Snowflaking works against the sole purpose of the Star Schema.By normalizing an intentionally de-normalized data structure, the additional joins slow query response time in order to save a bit of disk space. This is ridiculous when the only reason the Star Schema exists is to improve query response time. Most instances where I have seen snowflaking used in real-world data models has been the result of a DBA (database administrator) returning to old habits and normalizing tables because that's what they've always done in the world of OLTP. It's a tough habit to break.

One Exception: Bridge Tables

A situation that diagrammatically resembles a Snowflake Schema is a Bridge Table, although I do not consider it snowflaking. A bridge table contains the conversion mapping logic between two related entity tables.Consider two data marts, each with their own star schema. One mart contains sales data and the other manufacturing data. We want to connect the two fact tables through the Region entities to permit analysis of sales and production data together. One way to accomplish this is to conform the Region dimensions so sales and manufacturing use the same regional boundaries. That would permit a single entity table to be used by both fact tables. However, that is not always possible from a business perspective. Sales divides geography based on the number of customers and prospects in an area while manufacturing divides geography based on factory and warehouse locations. Forcing sales to use manufacturing's boundaries or vice-versa would create a lot of unnecessary hassles for the business with essentially no real benefits.

An alternative is to use a Bridge Table that equates sales regions and production regions. For example, Toronto may be in sales region Central East but in production region Ontario. The diagram below shows the Regions Bridge Table linking these two data marts.

Again, this appears similar to a snowflake of the Regions entities, but it actually involves no normalization so I don't consider it snowflaking.

A different definition of a Bridge Table exists that states they resolve many-to-many relationships between entities, but those situations should typically be resolved through the fact table. Bridge Tables should resolve different aggregation standards for similar entities. In addition to different geographical entities (described above), time is another frequent candidate for bridging when different data marts require different fiscal reporting periods.

Outrigger Tables

One other term that deserves comment comes from Ralph Kimball, one of the grandfathers of data warehousing and star schemas. While he strongly advises against snowflaking, he does permit some normalization of the star schema using something he calls Outrigger Tables. I will let him define it from his website:"A dimension can contain a reference to another dimension table. For instance, a bank account dimension can reference a separate dimension representing the date the account was opened. These secondary dimension references are called outrigger dimensions. Outrigger dimensions are permissible, but should be used sparingly. In most cases, the correlations between dimensions should be demoted to a fact table, where both dimensions are represented as separate foreign keys."One of Ralph's colleagues, Margy Ross, elaborates further:

"Outriggers are similar to snowflakes in that they’re used for many-to-one relationships, however they’re more limited. Outriggers are dimension tables joined to other dimension tables, but they’re just one more layer removed from the fact table, rather than being fully normalized snowflakes. Outriggers are most frequently used when one standard dimension table is referenced in another dimension, such as a hire date attribute in the employee dimension table."Margy continues:

"Like many things in life, outriggers are acceptable in moderation, but they should be viewed as the exception rather than the rule. If outriggers are rampant in your dimensional model, it’s time to return to the drawing board given the potentially negative impact on ease-of-use and query performance."While I agree with Margy that outriggers should be used sparingly, I fail to see a distinction between snowflaked tables and outriggers. The only apparent difference is that if snowflaked tables are small, then we can call them "outriggers" and they become permissible. I don't see the need for such a distinction. Snowflakes are snowflakes, even though they come in different sizes.

Avoid snowflaking at all costs. If there are too many outriggers or bridges in your model, it usually means the fact table is the problem and it needs to be split into two (or more) fact tables. Further discussion about cube design will follow in a later post.