Step 3 - Designing the Dimensions

So far we've looked at distinguishing the facts from the dimensions in the source data and ensuring each fact table is at one common grain. Now we can focus on designing the dimensions.With dimensions, you really only have two main design decisions:

- Which dimensions are required in this cube?

- What will the dimension hierarchies be, if any?

Some dimensions are easy to design, such as Time. Everyone knows that Days roll up to Weeks, roll up to Months, roll up to Quarters, roll up to Years. However, it is not always clear which data elements belong together in one hierarchy and which ones deserve their own dimensions.

For example, the data element Sales Region rolls up to Sales District and drills down to Customer. It's obvious those elements belong together in one hierarchy. However, Sales Manager rolls up to Sales Director and also drills down to Customer. Customer is common to both potential hierarchies. Should the Sales Managers and Directors be part of the Sales Region hierarchy or should they be in their own dimension? Or should they be an alternate hierarchy above Customers in one dimension? The answer depends on the specific nature of these data elements for this particular company.

I have found there are two guidelines that have helped me answer these dimension design questions:

- Eliminate ALL unnecessary dimensions and hierarchy levels from the cube

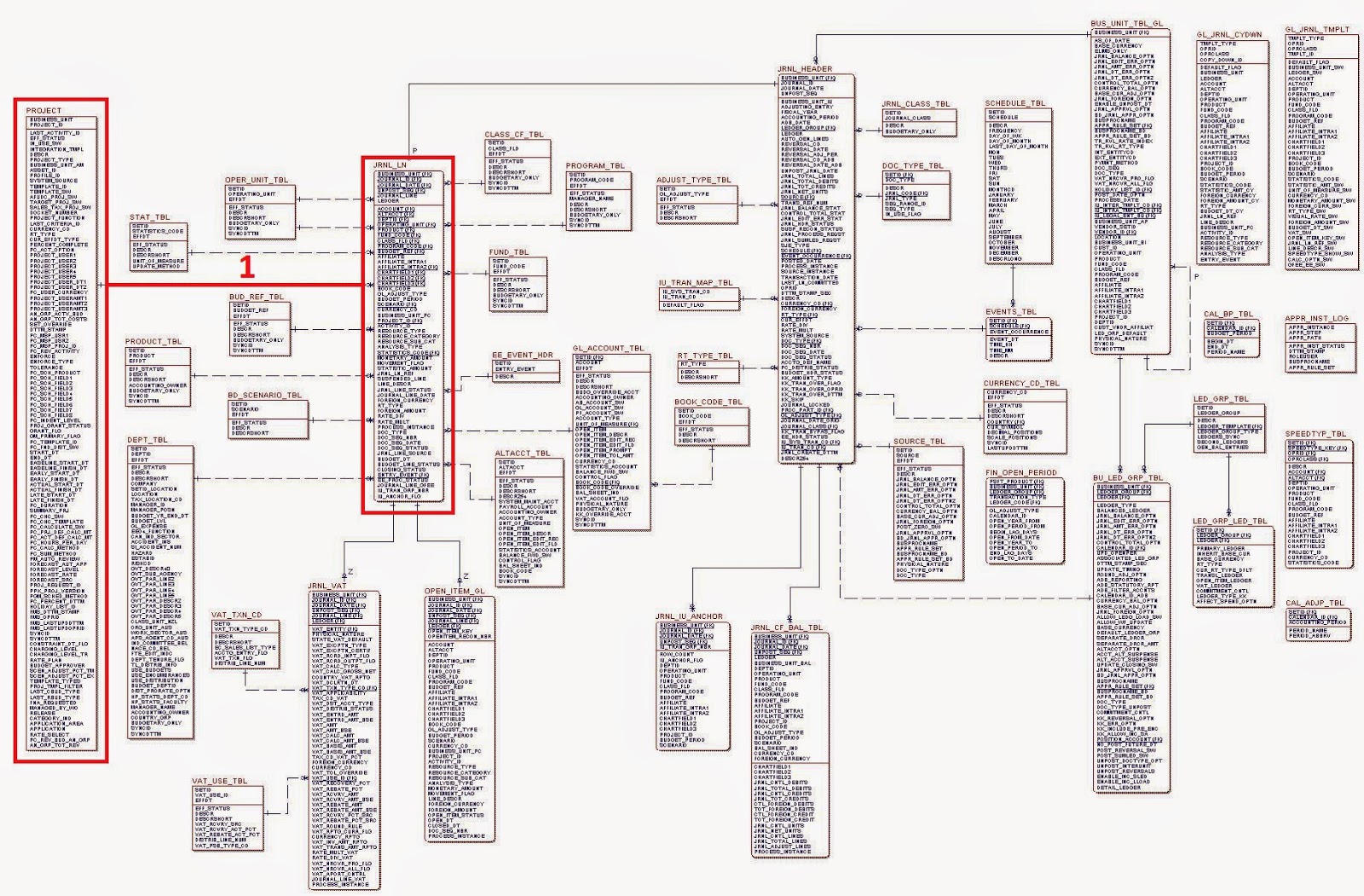

- Use Entity-Relationship diagrams to guide the dimension design

Eliminate Unnecessary Dimensions and Hierarchy Levels

The common temptation I have seen in many workplaces is to build a cube that can answer every ad hoc question that could ever be asked of the data. That is the worst thing anyone could do when designing a cube. Always design cubes to accomplish a particular purpose. By implication, this means the cube will not accomplish every purpose. That's OK because cubes designed to accomplish all purposes usually end up accomplishing none of them.If none of your organization's reports contain weekly summary levels, then don't include weeks in your time dimension hierarchy. If your scheduling system books staff by the shift, then don't build a reporting cube that shows hourly schedules. Keep your cubes consistent with your business processes.

When I review cubes and ask why certain dimensions have been designed the way they are, there are some terms that can indicate unnecessary dimensions in a cube:

- Just in case: "We added Weeks to the Time hierarchy just in case someone would want to build a weekly report." If no one has had a business need to build a weekly report yet, don't presume that business need is suddenly going to appear after you build your cube.

- Future requirement: "We built hourly schedule reporting functionality because the HR manager said that they might consider moving to hourly staff scheduling in a couple years." Don't build to imagined future requirements. Build to today's requirements to ensure you meet today's requirements. Failing to meet today's requirements but meeting non-existent future requirements does not equal success.

- One person asked for it: "We didn't see any existing reports with this dimension, but one user requested it." If that one user is the project owner who is funding this cube, then it's probably a good idea to include it. Otherwise, leave it out.

- It was in the source data, so we put it in the cube: "We put every data element we found into the cube." This may sound comprehensive but it is too large a purpose for one cube. Most source systems have data elements that are no longer used, so including everything guarantees a cluttered cube.

The second guideline will be tackled in the next post.